A straightforward solution for controlling AI

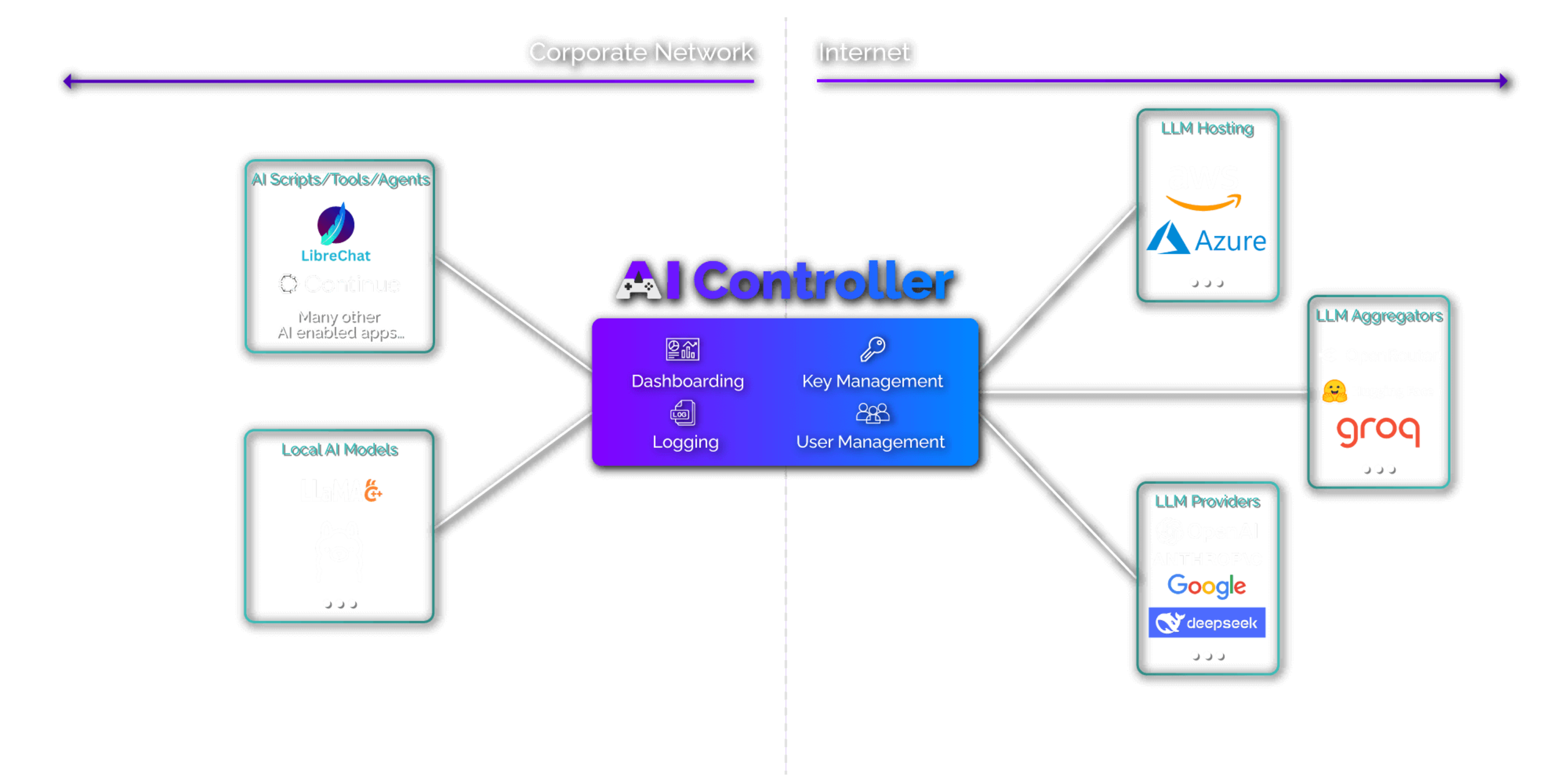

AI Controller enables IT administrators to manage AI centrally and securely, track performance and costs, enforce compliance and generate reports without added complexity. It delivers the core capabilities required of an AI gateway, providing the essential middleware without duplicating functionality already available in fast-evolving LLMs and front-end applications.

Control AI Internet Interface.

Control use of LLMs

AI Controller provides centralized, secure and model-agnostic API management that simplifies governance and reduces administrative overhead. With built-in privacy controls, flexible authentication, and real-time cost visibility, it helps organizations protect sensitive data, prevent unauthorized access, and avoid unexpected AI spending across teams.

Control deployment and data storage

Your AI gateway, set up your way. AI Controller gives IT administrators full control over how and where AI runs. Its flexible, low-overhead and future-ready architecture integrates seamlessly into existing infrastructure. AI Controller minimizes setup complexity, shields sensitive data and scales predictably as adoption grows, offering confidence from day one.

Control analysis and efficiency of LLMs

Gain deep visibility without sacrificing performance. AI Controller delivers rich, structured insights into LLM interactions with minimal overhead, enabling IT administrators to optimize use while maintaining full observability. It supports data-driven decisions and fine-tuning, ensuring fast, reliable access to AI without compromising compliance or monitoring.

Ready to Take Control?

AI Controller simplifies AI adoption, management and scaling in your organization, securely and efficiently. Whether the priority is governance, performance or cost control, AI Controller gives you full command of your AI infrastructure from day one.

Try it free for 30 days and experience how straightforward and powerful secure AI management can be.

Got questions?

AI Controller is always hosted on your own infrastructure (cloud, on-premises or air-gapped), so all data stays within your environment, giving you full control and auditability.

Most teams can get AI Controller up and running in under an hour, with no complicated configuration and no vendor lock-in.

AI Controller works with your existing AI tools. It acts as middleware between your current applications and LLM providers, so there is no need to replace them. It offers an OpenAI-compatible REST API with JSON-formatted requests and responses, plus an extensible API for integration with internal systems, giving you centralized control, visibility and security without disrupting existing workflows.
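Because the API is OpenAI-compatible, an existing client typically only needs a different base URL and API key. The sketch below builds a standard OpenAI-style chat completion request using only the Python standard library. The gateway address, the key, the model name and the `/chat/completions` path with Bearer authentication are assumptions based on the OpenAI convention, not confirmed details of AI Controller; check your own deployment's documentation for the exact endpoint.

```python
import json
import urllib.request

# Hypothetical values: replace with your own AI Controller address and API key.
GATEWAY_BASE_URL = "https://ai-controller.example.internal/v1"
GATEWAY_API_KEY = "your-ai-controller-api-key"


def build_chat_request(model: str, prompt: str) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request aimed at the gateway."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{GATEWAY_BASE_URL}/chat/completions",  # assumed OpenAI-style path
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {GATEWAY_API_KEY}",  # assumed auth scheme
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> dict:
    """Send the request over the network and decode the JSON response."""
    with urllib.request.urlopen(request, timeout=60) as response:
        return json.loads(response.read().decode("utf-8"))
```

Against a running gateway, `send(build_chat_request("gpt-4o", "Hello"))["choices"][0]["message"]["content"]` would return the model's reply, assuming the response follows the OpenAI schema. The application code stays the same whichever provider the gateway routes the request to.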

Changes to AI Controller's configuration require a restart. However, all administration tasks (such as creating, updating or deleting Providers, Rules and Users) can be performed live, without restarting.

AI Controller is deployed on your infrastructure (cloud, on-premises or air-gapped), ensuring all data remains under your control. Sensitive values in the database, such as API keys and passwords, are stored only as SHA-512 hashes, so the original values cannot be retrieved. LLM provider API keys, which must be presented to the provider with each request, are instead encrypted with AES-256.
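Storing a hash rather than the key itself means a presented key can be checked without the original ever being retrievable from the database. The minimal sketch below shows the general technique with Python's `hashlib` and a constant-time comparison. It is not AI Controller's code: the hex-digest storage format and the random key format are assumptions, and the AES-256 encryption of provider keys is not shown because the Python standard library has no AES implementation.

```python
import hashlib
import hmac
import secrets


def hash_secret(secret: str) -> str:
    """Return the SHA-512 hex digest that is stored instead of the secret."""
    return hashlib.sha512(secret.encode("utf-8")).hexdigest()


def verify_secret(candidate: str, stored_hash: str) -> bool:
    """Hash the presented value and compare digests in constant time."""
    return hmac.compare_digest(hash_secret(candidate), stored_hash)


# Issue a new random API key (hypothetical format); show it to the user once
# and persist only its hash.
new_key = secrets.token_urlsafe(32)
stored = hash_secret(new_key)

print(verify_secret(new_key, stored))      # True
print(verify_secret("wrong-key", stored))  # False
```

`hmac.compare_digest` avoids leaking, through timing differences, how many leading characters of a guess were correct.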

AI Controller reduces LLM response times through local response caching (a repeated request can be answered without another round trip to the provider), connection pooling and asynchronous request processing.
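To illustrate the caching idea, the sketch below keys each response on a hash of the canonicalized request body, so two identical requests within a time-to-live window trigger only one provider call. The `ResponseCache` class, its TTL and the fake provider are hypothetical; this shows the general technique, not how AI Controller implements its cache or decides which requests are cacheable.

```python
import hashlib
import json
import time
from typing import Callable


class ResponseCache:
    """Cache LLM responses keyed by a hash of the canonicalized request body."""

    def __init__(self, ttl_seconds: float = 300.0) -> None:
        self.ttl_seconds = ttl_seconds
        self._entries: dict[str, tuple[float, dict]] = {}

    @staticmethod
    def key_for(request_body: dict) -> str:
        # sort_keys makes logically identical requests produce the same key
        canonical = json.dumps(request_body, sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

    def get_or_call(self, request_body: dict, call_provider: Callable[[dict], dict]) -> dict:
        key = self.key_for(request_body)
        now = time.monotonic()
        entry = self._entries.get(key)
        if entry is not None and now - entry[0] < self.ttl_seconds:
            return entry[1]  # cache hit: no round trip to the provider
        response = call_provider(request_body)  # cache miss or expired entry
        self._entries[key] = (now, response)
        return response


# Usage with a fake provider that records every call it receives.
calls: list[dict] = []


def fake_provider(body: dict) -> dict:
    calls.append(body)
    return {"choices": [{"message": {"role": "assistant", "content": "Hi!"}}]}


cache = ResponseCache(ttl_seconds=60)
req = {"model": "gpt-4o", "messages": [{"role": "user", "content": "Hello"}]}
cache.get_or_call(req, fake_provider)
cache.get_or_call(req, fake_provider)
print(len(calls))  # 1
```

Exact-match caching like this helps most with repeated, deterministic prompts; requests that differ in any field produce a different key and go to the provider.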

Some providers offer a chat UI (e.g. ChatGPT, Microsoft Copilot, Google Gemini or Anthropic's Claude) that is locked to their own LLMs (e.g. GPT-4, Gemini or Claude). Because these UIs connect directly to the vendor's service, they cannot be routed through an AI gateway acting as an API proxy. Instead, it is simple to configure an alternative chat UI (e.g. LibreChat) to access these LLMs via AI Controller.

If you have other questions, please contact us.